Full code at bottom.

I have a nested array of arrays of ints and am iterating over the pairwise combinations of them and doing some math. My first attempt simply iterated over the length of a nested array and did element-wise operations. The commented out code is a branchless version that avoids branching by casting bools to ints and performing arithmetic. I was testing this if it was faster (the compiler already does the optimization it seems, at least with -O ReleaseFast):

const a = arrs.getRow(i);

const b = arrs.getRow(j);

var sum_a: u16 = 0;

var sum_b: u16 = 0;

for (0..arrs.height) |x| {

if (a[x] > b[x]) {

sum_a += 1;

} else if (a[x] < b[x]) {

sum_b += 1;

// const a_gt_b = a[x] > b[x];

// const b_gt_a = a[x] < b[x];

// sum_a += 1 * @intFromBool(a_gt_b);

// sum_b += 1 * @intFromBool(b_gt_a);

// }

}

This takes about 1 millisecond on my 9800X3D. I then tried a Vector version, but this was a lot slower:

const a: @Vector(8000, u8) = arrs.getRow(i)[0..8000].*;

const b: @Vector(8000, u8) = arrs.getRow(j)[0..8000].*;

const a_gt_b: @Vector(8000, u16) = @intFromBool(a > b);

const b_gt_a: @Vector(8000, u16) = @intFromBool(a < b);

const sum_a: u16 = @reduce(.Add, a_gt_b);

const sum_b: u16 = @reduce(.Add, b_gt_a);

Unfortunately, this takes about 19ms. I was also curious to see if @reduce also uses SIMD (like the < and > operators), so after the SIMD compares I summed with a for loop, but this was wildly slow. This takes around 33s:

var sum_a2: u16 = 0;

var sum_b2: u16 = 0;

for (0..arrs.height) |x| {

sum_a2 += a_gt_b[x];

sum_b2 += b_gt_a[x];

}

I can’t see why this loop would be so terribly slow. The fastest version I posted at the top does a similar for loop the same number of times with similar operations.

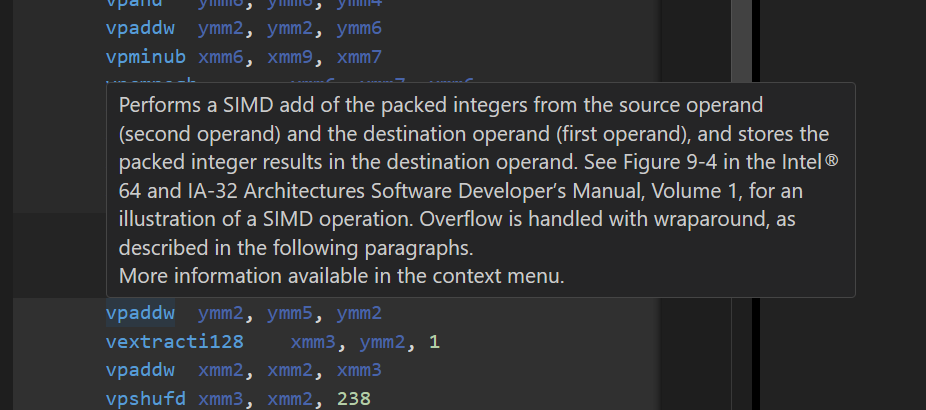

I’ve tried to view the assembly of the SIMD version and the SIMD/loop version to see what is different from the fast one, but am having a hard time getting rid of all debug info etc, so the assembly is ginormous and hard to parse.

Any advice on what’s up here? Thanks.

I’ve been running zig build-exe -O ReleaseFast src/main.zig && ./main on v0.15.0-dev.516+abbead1fb and here is my full code:

const std = @import("std");

const print = std.debug.print;

const Allocator = std.mem.Allocator;

pub fn Array2D(comptime T: type) type {

return struct {

const Self = @This();

mem: []T,

width: u32,

height: u32,

pub fn init(allocator: Allocator, width: u32, height: u32) !Self {

return .{

.mem = try allocator.alloc(T, width * height),

.width = width,

.height = height,

};

}

pub fn deinit(self: Self, allocator: Allocator) void {

allocator.free(self.mem);

}

pub fn get(self: Self, x: usize, y: usize) T {

std.debug.assert(x < self.width and y < self.height);

return self.mem[x * self.height + y];

}

pub fn getRow(self: Self, x: usize) []T {

std.debug.assert(x < self.width);

return self.mem[x * self.height ..][0..self.height];

}

pub fn set(self: Self, x: usize, y: usize, val: T) void {

self.mem[x * self.height + y] = val;

}

};

}

pub fn main() !void {

const prng = std.crypto.random;

var arena = std.heap.ArenaAllocator.init(std.heap.smp_allocator);

defer arena.deinit();

const alloc = arena.allocator();

const width = 100;

const height = 8000;

var arrs = try Array2D(u8).init(alloc, width, height);

for (0..100) |i| {

for (0..8000) |j| {

arrs.set(i, j, std.Random.int(prng, u8));

}

}

var timer = try std.time.Timer.start();

var idx: u16 = 0;

var outputs: [20_000]f32 = undefined;

for (0..arrs.width) |i| {

const a: @Vector(8000, u8) = arrs.getRow(i)[0..8000].*;

for (0..arrs.width) |j| {

const b: @Vector(8000, u8) = arrs.getRow(j)[0..8000].*;

const a_gt_b: @Vector(8000, u16) = @intFromBool(a > b);

const b_gt_a: @Vector(8000, u16) = @intFromBool(a < b);

// const sum_a: u16 = @reduce(.Add, a_gt_b);

// const sum_b: u16 = @reduce(.Add, b_gt_a);

var sum_a2: u16 = 0;

var sum_b2: u16 = 0;

for (0..arrs.height) |x| {

sum_a2 += a_gt_b[x];

sum_b2 += b_gt_a[x];

}

// try std.testing.expect(sum_a == sum_a2);

// const a = arrs.getRow(i);

// const b = arrs.getRow(j);

// var sum_a: u16 = 0;

// var sum_b: u16 = 0;

// for (0..arrs.height) |x| {

// const a_gt_b = a[x] > b[x];

// const b_gt_a = a[x] < b[x];

// sum_a += 1 * @intFromBool(a_gt_b);

// sum_b += 1 * @intFromBool(b_gt_a);

// // if (a[x] > b[x]) {

// // sum_a += 1;

// // } else if (a[x] < b[x]) {

// // sum_b += 1;

// // }

// }

const norm_a = @as(f32, @floatFromInt(sum_a)) / @as(f32, @floatFromInt(sum_a + sum_b));

const norm_b = @as(f32, @floatFromInt(sum_b)) / @as(f32, @floatFromInt(sum_a + sum_b));

outputs[idx] = norm_a;

outputs[idx + 1] = norm_b;

idx += 2;

}

}

const end: f64 = @floatFromInt(timer.read());

std.debug.print("{d}\n", .{end / 1_000_000_000.0});

}