## Preamble

Indirect calls through function pointers are a major blocker for bo…th #1006 and #6025. While a solution can be designed for the generic case, there always seems to be *some* core function pointer usage, often related to interfaces, that the breaks the generic solution. This proposal is intended to provide a way to represent some common usages of function pointers, particularly in interfaces, in a way that is both more optimizable and decoupled from various difficult design problems..

## Motivating Example

Let's consider some simple interface for demonstration purposes:

```zig

pub const Operation = struct {

vtable: *const VTable,

pub const VTable = struct {

operate: *const fn (x: i32) i32,

};

pub fn operate(op: Operation, x: i32) i32 {

return op.vtable.operate(x);

}

};

pub const addOne: Operation = .{ .vtable = &.{

.operate = &addOneOperate,

} };

fn addOneOperate(x: i32) i32 {

return x + 1;

}

pub const double: Operation = .{ .vtable = &.{

.operate = &doubleOperate,

} };

fn doubleOperate(x: i32) i32 {

return x * 2;

}

fn negate(x: i32) i32 {

return -x;

}

fn testOperation(name: []const u8, op: Operation) void {

for ([_]i32{ 1, 7, 12 }) |x| std.debug.print("{s}({d}) = {d}\n", .{ name, x, op.operate(x) });

}

const std = @import("std");

pub fn main() void {

testOperation("addOne", addOne);

testOperation("double", double);

var negate_op: Operation = undefined;

negate_op.vtable = &.{

.operate = &negate,

};

testOperation("negate", negate_op);

}

```

```

$ zig run example.zig

addOne(1) = 2

addOne(7) = 8

addOne(12) = 13

double(1) = 2

double(7) = 14

double(12) = 24

negate(1) = -1

negate(7) = -7

negate(12) = -12

```

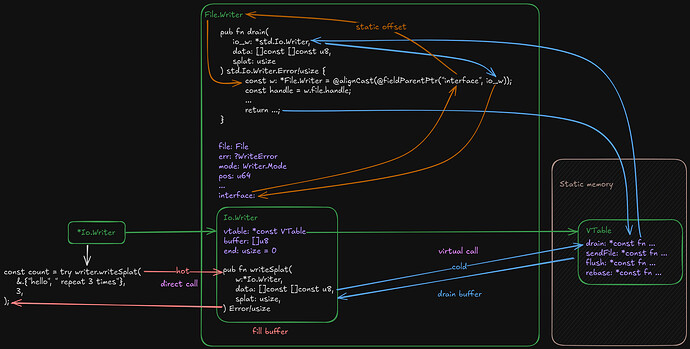

When the optimizer sees the indirect call `op.vtable.operate(x)`, it would be nice if it could know that all of the possible callees are pure, or other useful properties that hold for all callees. This would be easily determinable by iterating all of the callees, but that requires knowing all of the callees in the first place. In a more complicated example, it would be easy for an `Operation` to come from somewhere where the optimizer is not sure which vtable it holds, and then it is forced to assume that `op.vtable.operate(x);` could mutate any and all global state.

## Proposal

In pursuit of these goals, I propose having a language syntax that allows the compiler to be able to collect a complete list of all possible callees and communicate these to the optimizer at each call site. Here's a tentative syntax proposal, I'm sure it could be improved, but this is just to demonstrate the idea:

```diff

pub const Operation = struct {

vtable: *const VTable,

pub const VTable = struct {

- operate: *const fn (x: i32) i32,

+ operate: *const Operate,

+

+ pub const Operate = @RestrictCallees(fn (x: i32) i32);

};

pub fn operate(op: Operation, x: i32) i32 {

- return op.vtable.operate(x);

+ return op.vtable.operate(x); // This may only call `addOneOperate`, `doubleOperate`, or `negate`

}

};

pub const addOne: Operation = .{ .vtable = &.{

- .operate = &addOneOperate,

+ .operate = &@allowCallee(addOneOperate),

} };

fn addOneOperate(x: i32) i32 {

return x + 1;

}

pub const double: Operation = .{ .vtable = &.{

- .operate = &doubleOperate,

+ .operate = &@allowCallee(doubleOperate),

} };

fn doubleOperate(x: i32) i32 {

return x * 2;

}

fn negate(x: i32) i32 {

return -x;

}

fn testOperation(name: []const u8, op: Operation) void {

for ([_]i32{ 1, 7, 12 }) |x| std.debug.print("{s}({d}) = {d}\n", .{ name, x, op.operate(x) });

}

const std = @import("std");

pub fn main() void {

testOperation("addOne", addOne);

testOperation("double", double);

var negate_op: Operation = undefined;

negate_op.vtable = &.{

- .operate = &negate,

+ .operate = &@allowCallee(negate),

};

testOperation("negate", negate_op);

}

```

The way this works, is that some syntax like `@RestrictCallees` creates a function type that does not compare equal to other function types with the same signature. Instead, this produces a unique type in the same way as container type definitions, where a combination of the source location and captured values determines equality. Syntax like `@allowCallee` casts a comptime-known function value into the result restricted function type, checking that the signatures are compatible and adding a callee to the set of possible callees for that restricted function type when analyzed.

It would be checked illegal behavior to make an indirect call through a pointer to a restricted function type when the value of that pointer is not in the set of possible callees that were analyzed during compilation. Additionally, restricted function types would be required to be `callconv(.auto)`, because this feature is not designed to handle function pointers passed to and from external code, and to facilitate the aforementioned safety checks.

As an example implementation in the llvm backend, a metadata node forward reference could be reserved the first time an indirect call to a given restricted function type is encountered, and attached to every indirect call through that restricted function type. Then, during flush, these could be iterated and each metadata node filled in with all of the callees collected by the frontend.

## ~~Open~~ Questions

* ~~Does this prevent an interface such as `std.mem.Allocator` from being passed to another zig compilation unit? I believe this wouldn't work in the future anyway, because non-extern struct types would not be compatible between different ZCUs, due to the not-yet-implemented type tags not matching.~~ *This is indeed already illegal behavior.*

* ~~How does this interact with incremental? Incrementally adding callees seems simple enough, but can we incrementally remove a callee when all instances of `@allowCallee` for a given function become no longer referenced? Just leaving it in the callee set wouldn't work since that would cause a false negative a safety check.~~ *Using restricted function pointers that are distinct from the original function pointer makes this a non-issue.*

* ~~Is it useful to introduce a "restricted function pointer cast" that casts a runtime known normal function pointer into a compatible restricted function pointer and safety checks that the runtime function pointer is one of the analyzed allowed callees? Depending on how the indirect call safety check is implemented, this could be trivial or difficult.~~ *Not considered useful at this time.*