I learned a lot by writing my own packages and trying to hack the package manager to work with a dynamic library. Special thanks to @InKryption for helping to answer my questions about the package manager.

It’s useful to read someone’s experience with the build system API. Thank you for documenting this.

Here are some tips:

Luckily the easiest way I found is just to put any hash there initially and then zig build will complain and give you the correct hash. I know it’s not ideal until all package authors follow what Zap does by listing the hash in the release notes or the README.

The suggested workflow is to omit the hash field from the zon file entirely, and zig will tell you the expected hash, which is similar to the workflow you figured out here. A subcommand to compute the hash is also planned, so if you publish the package from the same computer you use it on, there will be no need for Trust On First Use.

// duck has exported itself as duck

// now you are re-exporting duck

// as a module in your project with the name duck

exe.addModule("duck", duck.module("duck"));

I think this is unnecessarily confusing. A better example would use b.addModule or exe.addAnonymousModule.

// you need to link to the output of the build process

// that was done by the duck package

// in this case, duck is outputting a library

// to which your project needs to link as well

exe.linkLibrary(duck.artifact("duck"));

This snippet will not be needed after #16206 is implemented.

Another fact that might be worth mentioning is that dependencies listed in build.zig.zon can be imported directly with @import in build.zig, which exposes the dependency’s build.zig as a namespace.

I will split the project into 3 packages A, B, and C.

Arguably, unless you have more than a few kilobytes of .zig files in B, B and C should be merged into one package. What is the purpose of separating them?

The only justification for this would be if you want to sometimes rely on duckdb being provided by package C and sometimes be provided by the system package manager. I think if you are going to separate into three packages like this instead of two for introductory material, you should include that context, otherwise stick with only two packages.

libduckdb.so

libduckdb-linux-amd64

In general, Zig packages should be cross-platform and build from source. Of course, it’s possible to ship binaries, but it is unusual. DuckDB appears to be open source, which means it would be better for the package to ship source code and provide a static library rather than a dynamically linked one. That would also make it cross-platform.

- The most important part and the hackiest part is that you need to use the constructs used for header files to install the library.

Nobody should be doing this. I think it is harmful to tell users to do this with an authoritative tone. You’re leaving bad advice on the Internet that risks being mine to clean up for years to come.

Here is some guidance: if it’s hacky, don’t suggest that people do it, especially for an API that is clearly malleable. Figure out a non-hacky way to do it, perhaps involving sending patches upstream to help iron out a use case.

I stopped reading after this point because using the header file APIs for non-headers was too cringe-inducing.

I’m happy to help you or anybody else with build system stuff, especially when you run into problems or limitations, but only if you’re using the system as intended. Your post reads as if the design of the build system forced your hand here, but that was not the case.

Hi Andrew,

Thanks for replying and I’ll modify the article based on some of your suggestions.

I’ll give a more detailed reply later, but the reason I use installLibraryHeaders to install the dynamic library is that I couldn’t find an alternative. I’ve pored over the source code and API but couldn’t find any other way right now. It’s possible I missed it, so I even asked @InKryption for an alternative by posting my question on zig-help. I also posted on zig issues.

I do believe eventually all the most common cases including building from a binary dynamic library will be covered easily.

As for splitting into A, B, and C, it’s common to isolate an external dependency in its own package A, and then have a wrapper library B, written in the language you are using, as a package that depends on it. That way projects other than this one, C, can also use B and A. There is an argument that A and B could be combined into one. I just happen to like separating them, since I might later be able to get a static library and put it in A without changing much of B.

Thank you again. As for the tone of the post, I didn’t mean it as an authoritative “do it this way” but more as “I had to do this today to make it work”. Maybe I should be more careful with the words I use.

Absolutely! If it weren’t “cringe-inducing,” I wouldn’t have called it hacky.

If I knew more about Zig internals I would certainly contribute more, but it’s a process, and as you can tell I’ve been trying my best to be part of the Zig community.

I’m helping where I can while learning the ins and outs of Zig itself. I’m submitting patches to what I can figure out, and asking questions and taking suggestions.

I think that my reply came off with a harsh tone, and I’m sorry for that. The important thing is that we both agree on working together to solve this use case without any hacks!

As a starting point, it would help me to understand where you got stuck before resorting to hacky stuff. Can you explain the problem statement a bit more?

So my problem statement is simple. I’d like to be able to incorporate the dynamic library in my build but separate the dynamic library and the wrapper in their respective packages separate from the main project.

If I separate them into 3 projects, then libduckdb.so doesn’t get exposed to the next layer through either artifact or module.

- Project A, libduckdb needs to expose libduckdb.so

- Project B, libduck needs to be built based on libduckdb.so and expose both libduck.a and libduckdb.so

- Project C needs to be built using both libduck.a and libduckdb.so

Basically, just as the current build system lets you use addLibraryHeaders(), I need something similar, like addDynamicLibraries().

In addition, for project A, there should be a way to create an artifact without requiring either source code or a linking step. An artifact could also be a set of steps that “copies” files to the destination directory.

For project C, the dynamic library libduckdb.so needs to be part of the artifact of project C to be part of the destination directory.

In the interest of keeping the discussion public so @andrewrk doesn’t have to repeat himself when he helps out, I’ve copied the discussion to the GitHub issue “Package manager: Build needs to include dynamic libraries as dependencies as Step” (Issue #16172, ziglang/zig) so we can keep track of everything there.

Ed and I had a discussion on zig.news. I have been wondering whether we can eliminate the requirement for a tarball package as a dependency in zon’s design.

In my experience, many OSS projects maintain downloadable packages only for official releases, while in the real world developers may depend on dev/unstable builds to get new features faster (e.g., the zigmod project depends on zig’s master branch). If an upstream dependency does not maintain a tarball for unstable branches, developers using zon can’t get the correct dependencies from upstream unless they are willing to create the upstream package themselves.

So I’m wondering whether we can borrow the idea from go mod. Go declares dependencies as URL + version. The URL usually points to a Git repository, while the version is interpreted as a Git tag. It is also possible to request a version as @latest (for example with go get), which resolves to the newest tagged release, or to the latest commit on the default branch when the repository has no tags. Compared with the current zon approach, the go.mod way offers more flexibility and lets us track an unstable upstream closely.

(And a side benefit: sometimes early projects do not even package official releases, but stay at a 0.1.x stage for a long time. In the Go world it’s completely fine to reference these dependencies because we just need URL + tag.)

A second benefit is for validation. The current zon design requires developers to maintain a hash alongside the corresponding dependency URL. This is necessary for integrity. However, a remote Git URL + tag should already ensure integrity. If zon pointed directly to a Git URL + tag, or a Git URL + commit ID, we could leverage the Git server to ensure integrity.

Has the community considered approaches like the ones above? Or is there any discussion explaining why the current zon design chose a tarball + hash approach?

(see also the linked issues from there)

More generally:

Here is just a quick tip, until the alternative methods are supported.

For GitHub repositories you can pick any arbitrary commit, for example GitHub - raysan5/raylib at 44659b7ba8bd6d517d75fac8675ecd026f713240. Click on Code, then right click > copy link on “Download ZIP”. This gets you a URL for a zip download; however, you can change the .zip extension to .tar.gz and it seems to return a tarball instead.

I used that so I could pick a newer raylib version (after the tagged 4.5 release) that works with recent Zig versions. I imagine GitLab or other repository hosting sites have similar ways to get a URL that gives you the tarball. It helps bridge the time until all those features are added.
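The extension swap described above can be sketched as a small shell helper. This is only an illustration: the function name zip_to_targz is made up, and the example link assumes the copied “Download ZIP” URL has the shape shown in the code.

```shell
# Turn a copied "Download ZIP" link into the matching .tar.gz link
# by swapping the file extension. zip_to_targz is a hypothetical name.
zip_to_targz() {
    case "$1" in
        *.zip) printf '%s\n' "${1%.zip}.tar.gz" ;;
        *)     echo "not a .zip URL: $1" >&2; return 1 ;;
    esac
}

# Assumed shape of the copied link for the raylib commit mentioned above.
zip_to_targz "https://github.com/raysan5/raylib/archive/44659b7ba8bd6d517d75fac8675ecd026f713240.zip"
```

The printed URL is what would go into the url field of build.zig.zon.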

Thanks @Sze for the info. You pointed me to a GitHub feature I knew about but had never realized could be used this way!

I may be wrong, but I don’t remember GitLab having an equivalent feature to turn a commit ID into a package. I pasted a commit of a public GitLab project as an example. As far as I know, Bitbucket does not have this feature either. I tend to think “Download as Zip” is GitHub specific.

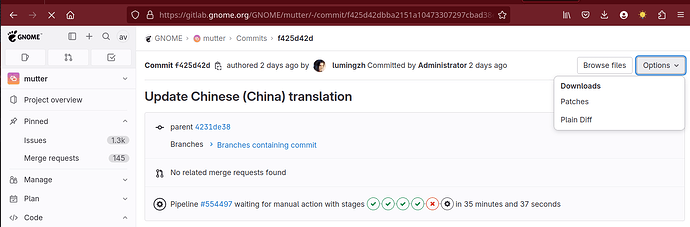

URL: Update Chinese (China) translation (f425d42d) · Commits · GNOME / mutter · GitLab

And thanks for the hints, @squeek502! Yes, this issue looks more like what I’m looking for. Let me check the details.

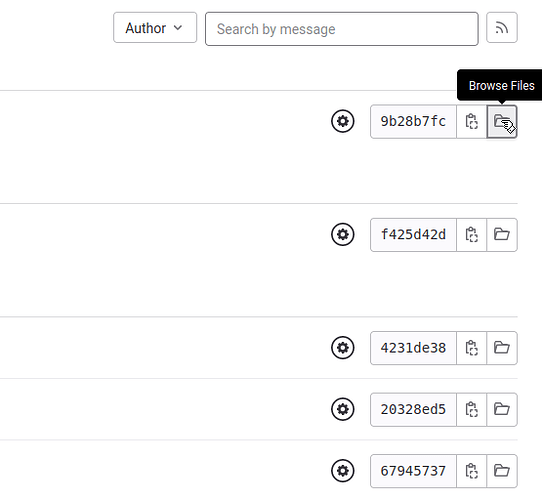

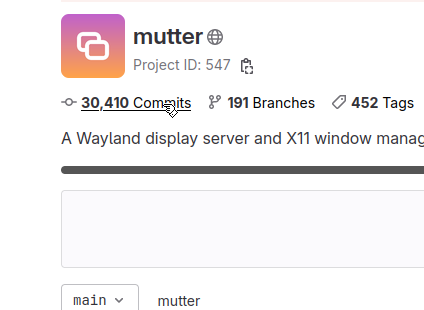

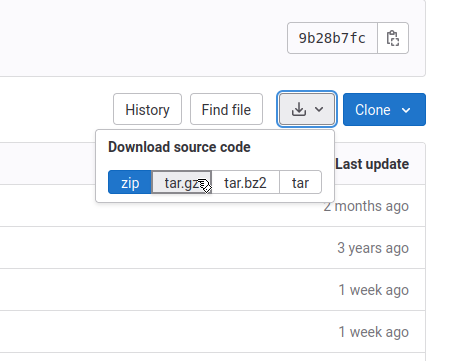

I just looked and gitlab actually has better support for this:

first click on commits:

then browse files on the wanted commit:

then download → tar.gz:

You can also click on Files in the sidebar on the left. It seems there is a Files view and a “Commit/Patch” view; only the former gives you archive download options.

Thanks @Sze for pointing this out! I had seen this before, but didn’t notice that it can be used as an API. You are right. I did a quick check: GitLab allows a wget command to download a file using the format below.

wget https://gitlab.gnome.org/GNOME/mutter/-/archive/<GitCommit>/mutter-<GitCommit>.tar.gz

Now we have GitLab and GitHub both supporting the download of a commit snapshot as a package, but my question still stands. Can we assume this is a standard Git feature, or is it a feature of specific hosting services?

I can see that the GitLab URL above is significantly different from GitHub’s. On GitHub, the format looks like the examples below (they are from build.zig.zon in the zls repo). What concerns me more is that I’m not sure whether the generated URLs can safely be treated as a stable API that will not change with a future server upgrade.

https://github.com/ziglibs/diffz/archive/90353d401c59e2ca5ed0abe5444c29ad3d7489aa.tar.gz

https://gist.github.com/antlilja/8372900fcc09e38d7b0b6bbaddad3904/archive/6c3321e0969ff2463f8335da5601986cf2108690.tar.gz

I would prefer that developers not have to maintain these URL formats. A config like “RepoURL + commitID” is a more consistent interface for developers. Feel free to correct me if I’m wrong.
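To make the “RepoURL + commitID” idea concrete, here is a rough sketch of a helper that expands such a pair into the archive URL formats quoted above. The function name commit_archive_url and the host patterns are assumptions for illustration only; a self-hosted GitLab under a different hostname would not be recognized, and nothing here is part of zig or zon.

```shell
# Map "repository URL + commit ID" to a tarball URL for two hosting services.
# commit_archive_url and the host patterns are hypothetical.
commit_archive_url() (
    repo=${1%/}          # drop a trailing slash
    repo=${repo%.git}    # tolerate a trailing ".git"
    commit=$2
    name=${repo##*/}     # last path component, e.g. "mutter"
    case "$repo" in
        https://github.com/*|https://gist.github.com/*)
            printf '%s/archive/%s.tar.gz\n' "$repo" "$commit" ;;
        https://gitlab.*)
            printf '%s/-/archive/%s/%s-%s.tar.gz\n' "$repo" "$commit" "$name" "$commit" ;;
        *)
            echo "unknown hosting service: $repo" >&2
            exit 1 ;;
    esac
)

commit_archive_url https://github.com/ziglibs/diffz 90353d401c59e2ca5ed0abe5444c29ad3d7489aa
commit_archive_url https://gitlab.gnome.org/GNOME/mutter "<GitCommit>"
```

The per-host table is exactly the piece that each package user has to carry around today; moving it into the tool would be one way to get the more consistent interface.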

git-archive is a standard feature of git and can operate remotely over git and git+ssh, but not, AFAIK, git+http:

$ file foo

foo: cannot open `foo' (No such file or directory)

$ git archive -o foo --remote https://github.com/ruby/ruby.git master

fatal: operation not supported by protocol

$ git archive -o foo --remote https://git.sr.ht/~mil/mepo master

fatal: operation not supported by protocol

$ git archive -o foo --remote git@gitlab.com:fjc/gem-ext-zig_builder.git master

Enter passphrase for key:

$ file foo

foo: POSIX tar archive

Pure-HTTP downloads are hosting-service specific; they are generally implemented as a wrapper around git-archive:

https://gitlab.com/gitlab-org/gitaly/-/blob/master/internal/gitaly/service/repository/archive.go#L240

https://git.sr.ht/~sircmpwn/git.sr.ht/tree/0.84.3/item/gitsrht/blueprints/repo.py#L439

https://github.com/lunny/gitea/blob/master/modules/git/repo_archive.go#L58

I think an established hosting provider is not likely to change the archive URL lightly as they know that it would greatly upset their users. Many packaging systems consume those URLs:

https://gitlab.alpinelinux.org/alpine/aports/-/blob/master/testing/mepo/APKBUILD?ref_type=heads#L19

https://github.com/flathub/com.milesalan.mepo/blob/master/com.milesalan.mepo.yml#L51

https://aur.archlinux.org/cgit/aur.git/tree/PKGBUILD?h=mepo#n14

One thing that has been an issue is that git-archive does not guarantee that the produced archives will be bit-identical from run to run; the checksum of the tar/zip file can change:

https://github.com/orgs/community/discussions/45830

https://blog.bazel.build/2023/02/15/github-archive-checksum.html

https://github.blog/changelog/2023-01-30-git-archive-checksums-may-change/

https://github.blog/2023-02-21-update-on-the-future-stability-of-source-code-archives-and-hashes/

If you rely on stable archives only for reproducibility (ensuring you always get identical files inside your archive), then we recommend you download source archives … with a commit ID for the :ref parameter. … the commit ID ensures you’ll always get the same file contents inside the archive … tarball and zipball formats have built-in protections against truncation, and TLS (by way of HTTPS) protects against corruption of the archive.

If you rely on stable archives for security (ensuring you don’t accidentally trigger a tarbomb, for example), we recommend you switch to release assets instead of using source downloads. … If relying on release assets isn’t possible, we urge you to consider designs that can accommodate (infrequent) future hash changes.
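One way to accommodate archive bytes that change is to hash the unpacked file contents rather than the archive itself. The following is a minimal sketch of that idea, not the hash that zig computes; tree_hash is a made-up name, and it assumes GNU coreutils (sha256sum) and file names without newlines. File modes, symlinks and empty directories are ignored.

```shell
# Hash a directory's contents instead of the archive bytes.
# tree_hash is a hypothetical helper; requires sha256sum.
tree_hash() (
    cd "$1" || exit 1
    find . -type f | LC_ALL=C sort | while IFS= read -r f; do
        printf '%s\n' "$f"    # include the path so renames change the hash
        sha256sum < "$f"      # then the digest of the file's bytes
    done | sha256sum | cut -d' ' -f1
)

# Usage (file names are placeholders): unpack two downloads of the same
# commit and compare the results.
#   mkdir a b && tar -xzf first.tar.gz -C a && tar -xzf second.tar.gz -C b
#   [ "$(tree_hash a)" = "$(tree_hash b)" ] && echo "same contents"
```

Because the file list is sorted before hashing, the result does not depend on the order or compression settings used when the archive was generated.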

Thanks @fjc for the info!

Actually, you pointed out exactly my concern. As we are talking about package management that may rely mostly on ssh+http (GitHub, GitLab, and many others), we need a stable interface, because the Zig community has no control over the server side.

Regarding tarballs, just as you pointed out, an HTTP wrapper around git+ssh will exist in most cases, but a) these wrappers are not standard and vary between servers, and b) as you also pointed out, the checksum can change. This is not an ideal solution for integrity checking.

(Actually, the checksum problem is something I was never aware of before. Thank you for enriching my knowledge!)

URL stability is another concern. I admit I may have overreacted on this. We can probably assume that hosting services will keep the URLs unchanged. To be honest, I come from a community that relies on this behavior. Let’s take it as an example.

Xmake, a C++ build tool, maintains a repo of popular C/C++ packages. They implement this by keeping the downloadable URL of every versioned source code release from the Internet. You may find them at GitHub - xmake-io/xmake-repo: 📦 An official xmake package repository. However, the zon case and xmake-repo still have a key difference. Xmake-repo keeps the direct URLs internally, but exposes only a string identifier like “zlib 1.0.0”. This indirection adds protection: if a URL really changes at some point, only xmake-repo needs to update the URL, and package users can keep their code unchanged. This is not the case for Zig, because zon exposes direct URLs to package users. It adds a risk, though an unlikely one, that a server-side change can force package users to update their commits across branches.
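The indirection can be pictured as a lookup table that users query by name and version. The sketch below is only an illustration of that idea; resolve_package and its entries are placeholders, not real xmake-repo data or any zig feature.

```shell
# Resolve "name version" to a download URL through a central table.
# The function name and the example.com URLs are placeholders.
resolve_package() {
    case "$1 $2" in
        "zlib 1.0.0") echo "https://example.com/downloads/zlib-1.0.0.tar.gz" ;;
        "zlib 1.0.1") echo "https://example.com/downloads/zlib-1.0.1.tar.gz" ;;
        *) echo "unknown package: $1 $2" >&2; return 1 ;;
    esac
}

resolve_package zlib 1.0.0
```

If a host changes its URL scheme, only the table needs a new entry; callers that ask for “zlib 1.0.0” stay as they are.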

The go.mod example I showed to Ed in the original posts, though implemented differently from xmake-repo, shares largely the same idea: a) we should avoid having package users maintain direct download URLs, b) integrity is critical. The current interface used by zon works, but is a bit fragile.

I wrote a follow-up article that mainly used modules to give access to the header and library file. Although it’s still a little hacky it at least doesn’t use Build.installHeaderFiles to install library files. This is what I can come up with with 0.11.0. Looking forward to 0.12.0 which introduces path in addition to module and artifact for accessing files that are not part of compilation or linking.